In today's fast-paced world, communication has evolved far beyond simple text messages. Voice messaging has become an essential feature in modern chat applications, allowing users to send and receive audio messages effortlessly. Whether you're building a social networking app, a team collaboration tool, or a customer service platform, integrating voice messaging can significantly enhance the user experience.

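In this blog post, we'll explore how to implement voice messaging in a Flutter application: recording audio with a live waveform, sending it as a chat message, and playing it back, all using the audio_waveforms package.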

First, declare the audio-recording permission in your app's AndroidManifest.xml file (your_app_path/android/app/src/main/AndroidManifest.xml), as shown below:

```xml
<!-- Place this directly inside <manifest>, outside <application>. -->
<uses-permission android:name="android.permission.RECORD_AUDIO"/>
```

Next, set the minimum Android SDK version to 24 in your_app_path/android/app/build.gradle. Replace the default minSdk = flutter.minSdkVersion line instead of adding a second minSdk setting next to it:

```groovy
defaultConfig {
    // Other entries (such as applicationId) stay unchanged.
    minSdk = 24
    targetSdk = flutter.targetSdkVersion
    versionCode = flutterVersionCode.toInteger()
    versionName = flutterVersionName
}
```

Now let's create a chat page. The code for it is given below:

```dart
import 'dart:io';

import 'package:audio_waveforms/audio_waveforms.dart';
import 'package:flutter/material.dart';
import 'package:path_provider/path_provider.dart';

class SendVoicePage extends StatefulWidget {
  const SendVoicePage({super.key, required this.title});

  final String title;

  @override
  State<SendVoicePage> createState() => _SendVoicePageState();
}

class _SendVoicePageState extends State<SendVoicePage> {
  bool isRecording = false;
  late TextEditingController _textController;
  late Directory appDirectory;
  late final RecorderController recorderController;

  @override
  void initState() {
    super.initState();
    _textController = TextEditingController();
    recorderController = RecorderController();
    _initializeAppDirectory();
  }

  // Recordings will be saved in the app's documents directory.
  Future<void> _initializeAppDirectory() async {
    appDirectory = await getApplicationDocumentsDirectory();
  }

  @override
  void dispose() {
    _textController.dispose();
    recorderController.dispose();
    super.dispose();
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        backgroundColor: Theme.of(context).colorScheme.inversePrimary,
        title: Text(widget.title),
      ),
      body: Column(
        children: [
          // Placeholder message list; message bubbles are added later.
          Expanded(
            child: ListView.builder(
              itemCount: 10,
              itemBuilder: (_, index) {
                if (index.isEven) {
                  return const Column();
                } else {
                  return const SizedBox(height: 30);
                }
              },
            ),
          ),
          // Input bar: a text field plus a mic button.
          SafeArea(
            child: Padding(
              padding: const EdgeInsets.all(5.0),
              child: Row(
                children: [
                  Expanded(
                    child: AnimatedSwitcher(
                      duration: const Duration(milliseconds: 200),
                      child: Container(
                        width: MediaQuery.of(context).size.width / 1.7,
                        height: 50,
                        decoration: BoxDecoration(
                          color: Colors.white,
                          borderRadius: BorderRadius.circular(12.0),
                        ),
                        padding: const EdgeInsets.only(left: 18),
                        margin: const EdgeInsets.symmetric(horizontal: 15),
                        child: TextField(
                          controller: _textController,
                          decoration: const InputDecoration(
                            hintText: "Type Something...",
                            hintStyle: TextStyle(color: Colors.black, fontWeight: FontWeight.bold),
                            contentPadding: EdgeInsets.only(top: 16),
                            border: InputBorder.none,
                          ),
                        ),
                      ),
                    ),
                  ),
                  IconButton(
                    onPressed: _startOrStopRecording,
                    // IconButton.icon takes a widget, not an IconData.
                    icon: const Icon(Icons.mic),
                    color: Colors.blue,
                    iconSize: 28,
                  ),
                ],
              ),
            ),
          ),
        ],
      ),
    );
  }

  // Implemented in the next step.
  Future<void> _startOrStopRecording() async {}
}
```

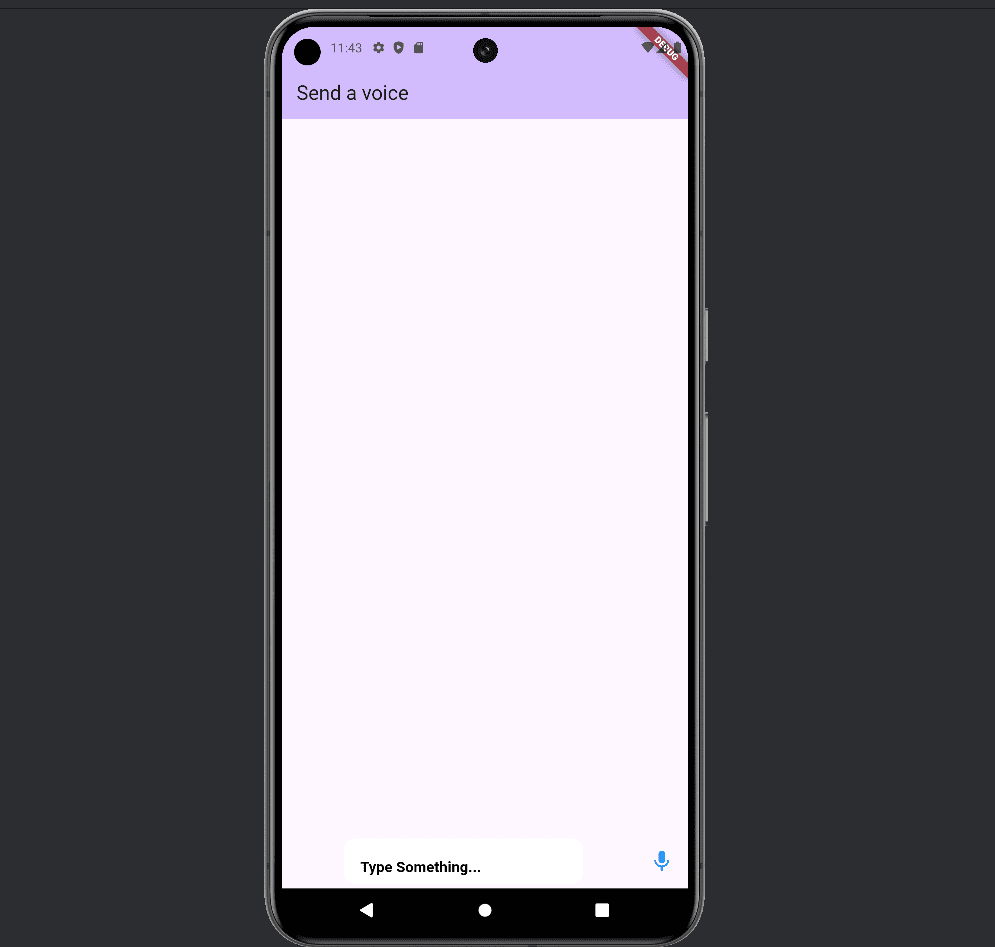

The output of the code is given below:

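Next, add voice recording with an audio waveform using the audio_waveforms package. The page above already imports it; the recording logic below also uses the permission_handler package to request microphone access. Make sure audio_waveforms, path_provider, permission_handler, and http (used later to download remote audio) are listed in pubspec.yaml, and import:

```dart
import 'package:audio_waveforms/audio_waveforms.dart';
import 'package:permission_handler/permission_handler.dart';
```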

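Tapping the mic icon should start a recording and show a live waveform. Before wiring that up, add the waveform view. In the complete code, it replaces the text field inside the AnimatedSwitcher while isRecording is true: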

```dart
Stack(
  children: [
    AudioWaveforms(
      enableGesture: true,
      size: Size(
        MediaQuery.of(context).size.width / 2.2,
        50,
      ),
      recorderController: recorderController,
      waveStyle: const WaveStyle(
        waveColor: Colors.white,
        extendWaveform: true,
        showMiddleLine: false,
      ),
      decoration: BoxDecoration(
        borderRadius: BorderRadius.circular(12.0),
        color: Colors.green,
      ),
      padding: const EdgeInsets.only(left: 18),
      margin: const EdgeInsets.symmetric(horizontal: 15),
    ),
  ],
)
```

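Next, implement _startOrStopRecording. The same button both records and sends: the first tap starts recording, and the second tap stops it and sends the voice message. In the complete code, the button's icon switches from a mic to a send icon while recording.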

```dart
// Requires the permission_handler import and a `String? _recordingPath;`
// field in _SendVoicePageState.
Future<void> _startOrStopRecording() async {
  final status = await Permission.microphone.request();
  if (!status.isGranted) return;

  if (isRecording) {
    // stop(false) stops without resetting the recorder and returns the file path.
    _recordingPath = await recorderController.stop(false);
    _sendMessageFromRecording();
  } else {
    // A timestamped file name keeps each recording unique.
    final path = '${appDirectory.path}/recording_${DateTime.now().millisecondsSinceEpoch}.aac';
    await recorderController.record(path: path);
  }

  // The widget may have been removed while awaiting.
  if (!mounted) return;
  setState(() {
    isRecording = !isRecording;
  });
}
```

* The function first requests microphone permission with Permission.microphone.request() from the permission_handler package, so the app has access to the microphone before it tries to record. If permission is not granted, nothing happens.

* If the app is currently recording (isRecording is true), the function stops the recording with recorderController.stop(false) and stores the recorded file's path in _recordingPath. It then calls _sendMessageFromRecording() to add the recording to the chat as a message.

* If the app is not currently recording (isRecording is false), the function starts a new recording. The file path includes the current timestamp so each file name is unique, and the recorder starts writing to that path.
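The file-naming step can be pulled out into plain Dart so the scheme can be checked without a device or microphone. This is a minimal sketch: `buildRecordingPath` and `recordingStartTime` are hypothetical helpers (not part of audio_waveforms) that assume the `recording_<millisecondsSinceEpoch>.aac` pattern used above.

```dart
/// Hypothetical helper reproducing the naming scheme in _startOrStopRecording:
/// `<directory>/recording_<millisecondsSinceEpoch>.aac`.
String buildRecordingPath(String directoryPath, DateTime now) {
  // A millisecond timestamp keeps names unique unless two recordings
  // start within the same millisecond.
  return '$directoryPath/recording_${now.millisecondsSinceEpoch}.aac';
}

/// Hypothetical inverse: recovers the start time from a path produced by
/// [buildRecordingPath], or returns null if the file name does not match.
DateTime? recordingStartTime(String path) {
  final match = RegExp(r'recording_(\d+)\.aac$').firstMatch(path);
  if (match == null) return null;
  return DateTime.fromMillisecondsSinceEpoch(int.parse(match.group(1)!));
}
```

_startOrStopRecording could then call buildRecordingPath(appDirectory.path, DateTime.now()), and recordingStartTime could label a bubble with its recording time if your chat model needs one.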

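The _sendMessageFromRecording() method adds the finished recording to the chat as a sent audio message:

```dart
// Requires a `final List<ChatMessage> _messages = [];` field in _SendVoicePageState.
void _sendMessageFromRecording() {
  if (_recordingPath != null) {
    setState(() {
      _messages.add(ChatMessage(
        isAudio: true,
        path: _recordingPath!,
        isSender: true,
      ));
    });
  }
}
```

The message list is then built from _messages. Replace the placeholder ListView in build() with the following: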

```dart
Expanded(
  child: ListView.builder(
    // Each message is followed by a spacer, hence length * 2.
    itemCount: _messages.length * 2,
    itemBuilder: (_, index) {
      if (index.isEven) {
        final messageIndex = index ~/ 2;
        final message = _messages[messageIndex];
        return Column(
          crossAxisAlignment: message.isSender ? CrossAxisAlignment.end : CrossAxisAlignment.start,
          children: [
            if (message.isAudio)
              WaveBubble(
                appDirectory: appDirectory,
                isSender: message.isSender,
                path: message.path,
                profilePic: message.profilePic,
              ),
          ],
        );
      } else {
        return const SizedBox(height: 30);
      }
    },
  ),
),
```
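The index arithmetic in the builder above interleaves messages and spacers. Restated as plain Dart, the mapping is easy to check. This is a sketch; `messageIndexFor` and `interleavedItemCount` are hypothetical helpers that mirror the builder logic, not part of Flutter.

```dart
/// Hypothetical helper mirroring the itemBuilder above: even list indices
/// show a message, odd indices show a spacer (returned here as null).
int? messageIndexFor(int listIndex) {
  // `~/` is integer division: list items 0 and 2 map to messages 0 and 1.
  return listIndex.isEven ? listIndex ~/ 2 : null;
}

/// Item count used by the builder: one message plus one spacer per message.
int interleavedItemCount(int messageCount) => messageCount * 2;
```

Because itemCount is length * 2, the list always ends with a spacer. Flutter's ListView.separated (with itemCount, itemBuilder, and separatorBuilder) expresses the same layout without the index arithmetic and places separators only between items.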

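Each audio message is displayed by a WaveBubble widget. It prepares a PlayerController for the recorded file (downloading it first if the path is not a local file), shows a play/pause button, and draws the waveform with AudioFileWaveforms. The WaveBubble class is given below, together with the ChatMessage model and an AudioPlayerManager helper: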

class WaveBubble extends StatefulWidget {

final bool isSender;

final String? path;

final double? width;

final Directory appDirectory;

final String? profilePic;

const WaveBubble({

Key? key,

required this.appDirectory,

this.width,

this.isSender = false,

this.path,

this.profilePic,

}) : super(key: key);

@override

State<WaveBubble> createState() => _WaveBubbleState();

}

class _WaveBubbleState extends State<WaveBubble> {

late PlayerController controller;

bool isPlaying = false;

final playerWaveStyle = const PlayerWaveStyle(

fixedWaveColor: Colors.white54,

liveWaveColor: Colors.white,

spacing: 6,

);

@override

void initState() {

super.initState();

controller = PlayerController();

_preparePlayer();

controller.onPlayerStateChanged.listen((state) {

if (state == PlayerState.paused || state == PlayerState.stopped) {

setState(() {

isPlaying = false;

});

}

});

}

Future<File> downloadFile(String url, String filename) async {

final response = await http.get(Uri.parse(url));

final dir = await getTemporaryDirectory();

final file = File('${dir.path}/$filename');

return file.writeAsBytes(response.bodyBytes);

}

Future<void> _preparePlayer() async {

if (widget.path != null) {

try {

final file = File(widget.path!);

if (!file.existsSync()) {

final filename = widget.path!.split('/').last;

final localFile = await downloadFile(widget.path!, filename);

await _preparePlayerWithPath(localFile.path);

} else {

await _preparePlayerWithPath(widget.path!);

}

} catch (e) {

print("Error in _preparePlayer: $e");

}

}

}

Future<void> _preparePlayerWithPath(String path) async {

try {

await controller.preparePlayer(

path: path,

shouldExtractWaveform: true,

);

} catch (e) {

debugPrint("Error: $e");

}

}

void _togglePlayPause() async {

if (isPlaying) {

await controller.pausePlayer();

} else {

await controller.startPlayer();

}

setState(() {

isPlaying = !isPlaying;

});

}

@override

void dispose() {

super.dispose();

controller.dispose();

}

@override

Widget build(BuildContext context) {

return widget.path != null

? Align(

alignment: widget.isSender ? Alignment.centerRight : Alignment.centerLeft,

child: Row(

mainAxisSize: MainAxisSize.min,

crossAxisAlignment: CrossAxisAlignment.start,

children: [

if (widget.isSender)

Container(

decoration: BoxDecoration(

borderRadius: BorderRadius.circular(12.0),

color: Colors.green,

),

margin: const EdgeInsets.symmetric(vertical: 4.0, horizontal: 8.0),

child: Row(

mainAxisSize: MainAxisSize.min,

crossAxisAlignment: CrossAxisAlignment.start,

children: [

IconButton(

icon: Icon(

isPlaying ? Icons.pause : Icons.play_arrow,

color: Colors.white,

),

onPressed: _togglePlayPause,

),

Stack(

alignment: Alignment.center,

children: [

AudioFileWaveforms(

playerController: controller,

size: Size(widget.width ?? 150, 50),

enableSeekGesture: true,

playerWaveStyle: playerWaveStyle,

),

],

),

],

),

)

else

Container(

decoration: BoxDecoration(

borderRadius: BorderRadius.circular(12.0),

color: Colors.red,

),

margin: const EdgeInsets.symmetric(vertical: 4.0, horizontal: 8.0),

child: Row(

mainAxisSize: MainAxisSize.min,

crossAxisAlignment: CrossAxisAlignment.start,

children: [

IconButton(

icon: Icon(

isPlaying ? Icons.pause : Icons.play_arrow,

color: Colors.white,

),

onPressed: _togglePlayPause,

),

Stack(

alignment: Alignment.center,

children: [

AudioFileWaveforms(

playerController: controller,

size: Size(widget.width ?? 150, 50),

enableSeekGesture: true,

playerWaveStyle: playerWaveStyle,

),

],

),

Container(

margin: const EdgeInsets.only(right: 8),

width: 40.0,

height: 40.0,

decoration: BoxDecoration(

shape: BoxShape.circle,

image: DecorationImage(

image: widget.profilePic != null

? NetworkImage(widget.profilePic!)

: const AssetImage("assets/images/default_profile.png") as ImageProvider,

fit: BoxFit.cover,

),

),

),

],

),

),

],

),

)

: const SizedBox.shrink();

}

}

class ChatMessage {

final bool isAudio;

final String path;

final bool isSender;

final String? profilePic;

ChatMessage({

required this.isAudio,

required this.path,

required this.isSender,

this.profilePic,

});

}

class AudioPlayerManager {

static final AudioPlayerManager _instance = AudioPlayerManager._internal();

factory AudioPlayerManager() => _instance;

AudioPlayerManager._internal();

PlayerController? _currentController;

void play(PlayerController controller) {

_currentController?.stopPlayer();

_currentController = controller;

controller.startPlayer();

}

void pauseCurrentPlayer() {

_currentController?.pausePlayer();

}

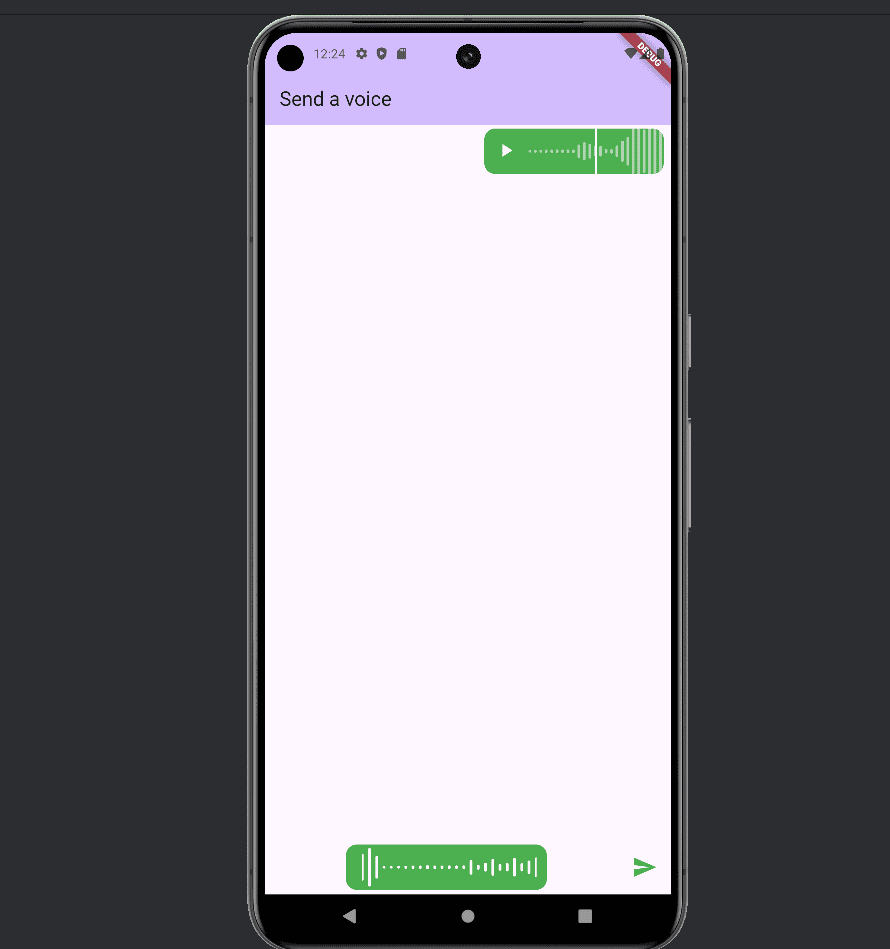

}After adding the WaveBubble, we can play sent voice messages with waveform visualization as below image:
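The _preparePlayer method in WaveBubble treats any path that does not exist on disk as a URL, and builds the cache file name with split('/').last, which keeps any query string. A slightly stricter variant is sketched below, assuming remote messages always use http or https URLs; `VoiceSource`, `classifyAudioPath`, and `cacheFileNameFor` are hypothetical names, not part of audio_waveforms.

```dart
import 'dart:io';

/// Hypothetical classification of where a voice message's audio lives.
enum VoiceSource { localFile, remoteUrl, missing }

/// Checks for an http(s) scheme explicitly instead of assuming that any
/// path missing on disk must be a URL.
VoiceSource classifyAudioPath(String path) {
  final uri = Uri.tryParse(path);
  if (uri != null && (uri.scheme == 'http' || uri.scheme == 'https')) {
    return VoiceSource.remoteUrl;
  }
  return File(path).existsSync() ? VoiceSource.localFile : VoiceSource.missing;
}

/// Derives a cache file name from a URL's last path segment, ignoring any
/// query string; falls back to a fixed name when the path has no segment.
String cacheFileNameFor(String url) {
  final segments = Uri.parse(url).pathSegments;
  if (segments.isNotEmpty && segments.last.isNotEmpty) return segments.last;
  return 'voice_message.aac';
}
```

With this, _preparePlayer could download only for VoiceSource.remoteUrl and log VoiceSource.missing instead of attempting an HTTP request on a local path.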

The complete code for recording and playing voice is provided below:

```dart
// pubspec.yaml dependencies used here: audio_waveforms, path_provider,
// permission_handler, http.
import 'dart:async';
import 'dart:io';

import 'package:audio_waveforms/audio_waveforms.dart';
import 'package:flutter/material.dart';
import 'package:http/http.dart' as http;
import 'package:path_provider/path_provider.dart';
import 'package:permission_handler/permission_handler.dart';

class SendVoicePage extends StatefulWidget {
  const SendVoicePage({super.key, required this.title});

  final String title;

  @override
  State<SendVoicePage> createState() => _SendVoicePageState();
}

class _SendVoicePageState extends State<SendVoicePage> {
  bool isRecording = false;
  late TextEditingController _textController;
  late Directory appDirectory;
  final List<ChatMessage> _messages = [];
  late final RecorderController recorderController;
  String? _recordingPath;

  @override
  void initState() {
    super.initState();
    _textController = TextEditingController();
    recorderController = RecorderController();
    _initializeAppDirectory();
  }

  // Recordings are saved in the app's documents directory.
  Future<void> _initializeAppDirectory() async {
    appDirectory = await getApplicationDocumentsDirectory();
  }

  @override
  void dispose() {
    _textController.dispose();
    recorderController.dispose();
    super.dispose();
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        backgroundColor: Theme.of(context).colorScheme.inversePrimary,
        title: Text(widget.title),
      ),
      body: Column(
        children: [
          // Message list: even indices are messages, odd indices are spacers.
          Expanded(
            child: ListView.builder(
              itemCount: _messages.length * 2,
              itemBuilder: (_, index) {
                if (index.isEven) {
                  final messageIndex = index ~/ 2;
                  final message = _messages[messageIndex];
                  return Column(
                    crossAxisAlignment: message.isSender ? CrossAxisAlignment.end : CrossAxisAlignment.start,
                    children: [
                      if (message.isAudio)
                        WaveBubble(
                          appDirectory: appDirectory,
                          isSender: message.isSender,
                          path: message.path,
                          profilePic: message.profilePic,
                        ),
                    ],
                  );
                } else {
                  return const SizedBox(height: 30);
                }
              },
            ),
          ),
          SafeArea(
            child: Padding(
              padding: const EdgeInsets.all(5.0),
              child: Row(
                children: [
                  Expanded(
                    // Show a live waveform instead of the text field while recording.
                    child: AnimatedSwitcher(
                      duration: const Duration(milliseconds: 200),
                      child: isRecording
                          ? Stack(
                              children: [
                                AudioWaveforms(
                                  enableGesture: true,
                                  size: Size(MediaQuery.of(context).size.width / 2.2, 50),
                                  recorderController: recorderController,
                                  waveStyle: const WaveStyle(
                                    waveColor: Colors.white,
                                    extendWaveform: true,
                                    showMiddleLine: false,
                                  ),
                                  decoration: BoxDecoration(
                                    borderRadius: BorderRadius.circular(12.0),
                                    color: Colors.green,
                                  ),
                                  padding: const EdgeInsets.only(left: 18),
                                  margin: const EdgeInsets.symmetric(horizontal: 15),
                                ),
                              ],
                            )
                          : Container(
                              width: MediaQuery.of(context).size.width / 1.7,
                              height: 50,
                              decoration: BoxDecoration(
                                color: Colors.white,
                                borderRadius: BorderRadius.circular(12.0),
                              ),
                              padding: const EdgeInsets.only(left: 18),
                              margin: const EdgeInsets.symmetric(horizontal: 15),
                              child: TextField(
                                controller: _textController,
                                decoration: const InputDecoration(
                                  hintText: "Type Something...",
                                  hintStyle: TextStyle(color: Colors.black, fontWeight: FontWeight.bold),
                                  contentPadding: EdgeInsets.only(top: 16),
                                  border: InputBorder.none,
                                ),
                              ),
                            ),
                    ),
                  ),
                  IconButton(
                    onPressed: _startOrStopRecording,
                    // Mic starts a recording; send stops it and sends the message.
                    icon: Icon(isRecording ? Icons.send : Icons.mic),
                    color: isRecording ? Colors.green : Colors.blue,
                    iconSize: 28,
                  ),
                ],
              ),
            ),
          ),
        ],
      ),
    );
  }

  Future<void> _startOrStopRecording() async {
    final status = await Permission.microphone.request();
    if (!status.isGranted) return;

    if (isRecording) {
      // stop(false) stops without resetting the recorder and returns the file path.
      _recordingPath = await recorderController.stop(false);
      _sendMessageFromRecording();
    } else {
      final path = '${appDirectory.path}/recording_${DateTime.now().millisecondsSinceEpoch}.aac';
      await recorderController.record(path: path);
    }

    if (!mounted) return;
    setState(() {
      isRecording = !isRecording;
    });
  }

  void _sendMessageFromRecording() {
    if (_recordingPath != null) {
      setState(() {
        _messages.add(ChatMessage(isAudio: true, path: _recordingPath!, isSender: true));
      });
    }
  }
}

class WaveBubble extends StatefulWidget {
  final bool isSender;
  final String? path;
  final double? width;
  final Directory appDirectory;
  final String? profilePic;

  const WaveBubble({
    super.key,
    required this.appDirectory,
    this.width,
    this.isSender = false,
    this.path,
    this.profilePic,
  });

  @override
  State<WaveBubble> createState() => _WaveBubbleState();
}

class _WaveBubbleState extends State<WaveBubble> {
  late final PlayerController controller;
  StreamSubscription<PlayerState>? _playerStateSubscription;
  bool isPlaying = false;

  final playerWaveStyle = const PlayerWaveStyle(
    fixedWaveColor: Colors.white54,
    liveWaveColor: Colors.white,
    spacing: 6,
  );

  @override
  void initState() {
    super.initState();
    controller = PlayerController();
    _preparePlayer();
    // Reset the play/pause icon when playback is paused or stops.
    _playerStateSubscription = controller.onPlayerStateChanged.listen((state) {
      if (!mounted) return;
      if (state == PlayerState.paused || state == PlayerState.stopped) {
        setState(() => isPlaying = false);
      }
    });
  }

  // Downloads a remote audio file into the temporary directory.
  Future<File> downloadFile(String url, String filename) async {
    final response = await http.get(Uri.parse(url));
    if (response.statusCode != 200) {
      throw HttpException('Download failed with status ${response.statusCode}', uri: Uri.parse(url));
    }
    final dir = await getTemporaryDirectory();
    final file = File('${dir.path}/$filename');
    return file.writeAsBytes(response.bodyBytes);
  }

  // Local files are played directly; any other path is treated as a URL
  // and downloaded first.
  Future<void> _preparePlayer() async {
    final path = widget.path;
    if (path == null) return;
    try {
      if (File(path).existsSync()) {
        await _preparePlayerWithPath(path);
      } else {
        final filename = path.split('/').last;
        final localFile = await downloadFile(path, filename);
        await _preparePlayerWithPath(localFile.path);
      }
    } catch (e) {
      debugPrint("Error in _preparePlayer: $e");
    }
  }

  // Loads the file and extracts waveform data for AudioFileWaveforms.
  Future<void> _preparePlayerWithPath(String path) async {
    try {
      await controller.preparePlayer(path: path, shouldExtractWaveform: true);
    } catch (e) {
      debugPrint("Error: $e");
    }
  }

  Future<void> _togglePlayPause() async {
    // Decide the target state first: the paused/stopped listener may
    // already have changed isPlaying by the time the await completes.
    final shouldPlay = !isPlaying;
    if (shouldPlay) {
      await controller.startPlayer();
    } else {
      await controller.pausePlayer();
    }
    if (!mounted) return;
    setState(() => isPlaying = shouldPlay);
  }

  @override
  void dispose() {
    // Release the listener and the player before super.dispose().
    _playerStateSubscription?.cancel();
    controller.dispose();
    super.dispose();
  }

  // Play/pause button plus waveform, shared by sent and received bubbles.
  List<Widget> _playerControls() => [
        IconButton(
          icon: Icon(isPlaying ? Icons.pause : Icons.play_arrow, color: Colors.white),
          onPressed: _togglePlayPause,
        ),
        AudioFileWaveforms(
          playerController: controller,
          size: Size(widget.width ?? 150, 50),
          enableSeekGesture: true,
          playerWaveStyle: playerWaveStyle,
        ),
      ];

  // Sender avatar for received messages; the default asset must be
  // declared in pubspec.yaml.
  Widget _avatar() => Container(
        margin: const EdgeInsets.only(right: 8),
        width: 40.0,
        height: 40.0,
        decoration: BoxDecoration(
          shape: BoxShape.circle,
          image: DecorationImage(
            image: widget.profilePic != null
                ? NetworkImage(widget.profilePic!)
                : const AssetImage("assets/images/default_profile.png") as ImageProvider,
            fit: BoxFit.cover,
          ),
        ),
      );

  @override
  Widget build(BuildContext context) {
    if (widget.path == null) return const SizedBox.shrink();

    return Align(
      alignment: widget.isSender ? Alignment.centerRight : Alignment.centerLeft,
      child: Container(
        decoration: BoxDecoration(
          borderRadius: BorderRadius.circular(12.0),
          // Sent messages are green; received messages are red.
          color: widget.isSender ? Colors.green : Colors.red,
        ),
        margin: const EdgeInsets.symmetric(vertical: 4.0, horizontal: 8.0),
        child: Row(
          mainAxisSize: MainAxisSize.min,
          crossAxisAlignment: CrossAxisAlignment.start,
          children: [
            ..._playerControls(),
            if (!widget.isSender) _avatar(),
          ],
        ),
      ),
    );
  }
}

class ChatMessage {
  final bool isAudio;
  final String path;
  final bool isSender;
  final String? profilePic;

  ChatMessage({
    required this.isAudio,
    required this.path,
    required this.isSender,
    this.profilePic,
  });
}

// Optional helper that keeps only one voice message playing at a time.
// WaveBubble does not use it as written; to adopt it, call
// AudioPlayerManager().play(controller) instead of controller.startPlayer().
class AudioPlayerManager {
  static final AudioPlayerManager _instance = AudioPlayerManager._internal();

  factory AudioPlayerManager() => _instance;

  AudioPlayerManager._internal();

  PlayerController? _currentController;

  // Stops the previously active player (if different), then starts this one.
  void play(PlayerController controller) {
    if (!identical(_currentController, controller)) {
      _currentController?.stopPlayer();
    }
    _currentController = controller;
    controller.startPlayer();
  }

  void pauseCurrentPlayer() {
    _currentController?.pausePlayer();
  }
}
```

The output of the corresponding code is shown below:

In this blog, we explored how to implement voice messaging in Flutter: recording audio with a live waveform, sending the recording as a chat message, and playing it back in a WaveBubble widget with waveform visualization. By following these steps, you can add voice messaging to your Flutter app and give users a richer, more interactive chat experience.

To learn how to share a file, link, or text in Flutter, read our blog How to Share a File, Link, & Text Using Flutter.