ORM Cache

The Odoo framework provides the ormcache decorator to manage the in-memory cache. In this recipe, we will explore how you can manage the cache for your functions.

The classes of this ORM cache are available at /odoo/tools/cache.py. To use them in any file, you need to import them:

from odoo import tools

After importing the classes, you can use the ORM cache decorators. Odoo provides different types of in-memory cache decorators. We'll look at each of these in the following sections.

ormcache

This is the simplest and most-used cache decorator. You need to pass the names of the arguments on which the method's result depends. The following is an example method with the ormcache decorator:

@tools.ormcache('key')
def _check_data(self, key):
    # some code
    return result

When you call this method for the first time, it will be executed, and the result will be returned. ormcache will store this result based on the value of the key parameter. When you call the method again with the same key value, the result will be served from the cache without executing the actual method.

Sometimes, your method's result depends on the environment attributes. In these cases, you can declare the method as follows:

@tools.ormcache('self.env.uid', 'key')
def _check_data(self, key):
    # some code
    return result

The method given in this example will store the cache based on the environment user and the value of the key parameter.

ormcache_context

This cache works similarly to ormcache, except that it depends on the parameters plus the values in the context. In this cache's decorator, you need to pass the parameter name and a list of context keys. For example, if your method's output depends on the lang and timezone (tz) keys in the context, you can use ormcache_context:

@tools.ormcache_context('key', keys=('tz', 'lang'))
def _check_data(self, key):
    # some code
    return result

The cache in the preceding example will depend on the key argument and the values of the tz and lang keys in the context.
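To make the idea of a context-dependent cache key more concrete, here is a minimal plain-Python sketch. It is not Odoo's implementation: build_cache_key is a hypothetical helper, and the context is modelled as an ordinary dictionary.

```python
def build_cache_key(args, context, keys):
    """Hypothetical helper: combine positional arguments with the
    selected context values into one hashable cache key."""
    # Only the listed context keys take part; missing keys count as None.
    context_part = tuple(context.get(k) for k in keys)
    return tuple(args) + context_part


# Same argument, different language: two distinct cache entries.
key_en = build_cache_key(("partner",), {"lang": "en_US", "tz": "UTC"}, ("tz", "lang"))
key_fr = build_cache_key(("partner",), {"lang": "fr_FR", "tz": "UTC"}, ("tz", "lang"))

# A context key that is not listed (here, "uid") does not affect the cache key.
key_en_uid = build_cache_key(
    ("partner",), {"lang": "en_US", "tz": "UTC", "uid": 2}, ("tz", "lang")
)
```

Under these assumptions, a change of language or timezone produces a new cache entry, while any other context value is ignored.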

ormcache_multi

Some methods carry out an operation on multiple records or IDs. If you want to add a cache to these kinds of methods, you can use the ormcache_multi decorator. You need to pass the multi parameter, and during the method call, the ORM will generate the cache keys by iterating over this parameter. In this method, you need to return the result in dictionary format, with an element of the multi parameter as the key. Take a look at the following example:

@tools.ormcache_multi('mode', multi='ids')
def _check_data(self, mode, ids):
    result = {}
    for i in ids:
        data = ...  # some calculations based on ids
        result[i] = data
    return result

Suppose we called the preceding method with [1,2,3] as the IDs. The method will return a result in {1:... , 2:..., 3:... } format. The ORM will cache the result based on these keys. If you make another call with [1,2,3,4,5] as the IDs, your method will receive [4, 5] as the ids parameter, so the method will carry out the operations for the 4 and 5 IDs and the rest of the result will be served from the cache.
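The partial-hit behaviour described above can be sketched in plain Python. This is only an illustration under stated assumptions, not the code behind ormcache_multi: cached_multi and check_data are hypothetical names, and the cache is an ordinary dictionary.

```python
def cached_multi(compute, cache, mode, ids):
    """Hypothetical stand-in for the behaviour described above.

    `compute(mode, ids)` must return a dict keyed by the given ids.
    `cache` is a plain dict shared between calls.
    """
    # One cache entry per (mode, id) pair.
    missing = [i for i in ids if (mode, i) not in cache]
    if missing:
        # Only the IDs that are not cached yet reach the real method.
        for i, value in compute(mode, missing).items():
            cache[(mode, i)] = value
    return {i: cache[(mode, i)] for i in ids}


calls = []  # records which IDs the underlying method actually received


def check_data(mode, ids):
    calls.append(list(ids))
    return {i: i * 10 for i in ids}  # placeholder calculation


cache = {}
first = cached_multi(check_data, cache, "draft", [1, 2, 3])
second = cached_multi(check_data, cache, "draft", [1, 2, 3, 4, 5])
```

After both calls, calls holds [1, 2, 3] followed by [4, 5], which mirrors the description: the second call computes only the two IDs that are not yet cached.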

The ORM cache keeps the cache in dictionary format (the cache lookup). The keys of this cache will be generated based on the signature of the decorated method and the values will be the result. Put simply, when you call the method with the x, y parameters and the result of the method is x+y, the cache lookup will be {(x, y): x+y}. This means that the next time you call this method with the same parameters, the result will be served directly from this cache. This saves computation time and makes the response faster.
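The dictionary lookup described here can be imitated with a few lines of plain Python. The sketch below is a simplified stand-in, not Odoo's decorator: simple_cache and its lookup attribute are hypothetical names introduced for illustration.

```python
import functools


def simple_cache(method):
    """Hypothetical, simplified stand-in for an argument-keyed cache."""
    lookup = {}  # maps a tuple of arguments to the stored result

    @functools.wraps(method)
    def wrapper(*args):
        if args not in lookup:
            lookup[args] = method(*args)  # miss: run the real method once
        return lookup[args]  # hit: served from the dictionary

    wrapper.lookup = lookup  # exposed only so the example can inspect it
    return wrapper


executions = []  # records every time the body of add() really runs


@simple_cache
def add(x, y):
    executions.append((x, y))
    return x + y


add(2, 3)
add(2, 3)  # same arguments: the body of add() does not run again
```

Inspecting add.lookup after these calls shows a single entry keyed by the argument tuple, matching the {(x, y): x+y} shape.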

The ORM cache is an in-memory cache, so it is stored in RAM and occupies memory. Do not use ormcache to serve large data, such as images or files.

You should use the ORM cache on pure functions. A pure function is a method that always returns the same result for the same arguments. The output of these methods depends only on the arguments, so they return the same result. If this is not the case, you need to manually clear the cache when you perform operations that make the cache's state invalid. To clear the cache, call the clear_caches() method:

self.env[model_name].clear_caches()

Once you have cleared the cache, the next call to the method will execute the method and store the result in the cache, and all subsequent method calls with the same parameter will be served from the cache.
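Why a non-pure method needs manual invalidation can be shown with Python's standard functools.lru_cache, used here purely as an analogy for the ORM cache. The prices dictionary and get_price function are hypothetical, and cache_clear() plays the role that clear_caches() plays in the recipe.

```python
import functools

prices = {"apple": 1.0}  # mutable data the cached function depends on


@functools.lru_cache(maxsize=None)
def get_price(name):
    # Not a pure function: the result depends on `prices`, not only on `name`.
    return prices[name]


before = get_price("apple")

prices["apple"] = 2.0
stale = get_price("apple")  # still the old value, served from the cache

get_price.cache_clear()  # invalidate after changing the underlying data
fresh = get_price("apple")  # recomputed and cached again
```

Until the cache is cleared, the function keeps returning the value computed before the data changed.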

Least Recently Used (LRU)

The ORM cache is the Least Recently Used (LRU) cache, meaning that if a

key in the cache is not used frequently, it will be removed. If you don't use the ORM

cache properly, it might do more harm than good. For instance, if the argument passed in

a method is always different, then each time Odoo will look in the cache first and then

call the method to compute. If you want to learn how your cache is performing, you can

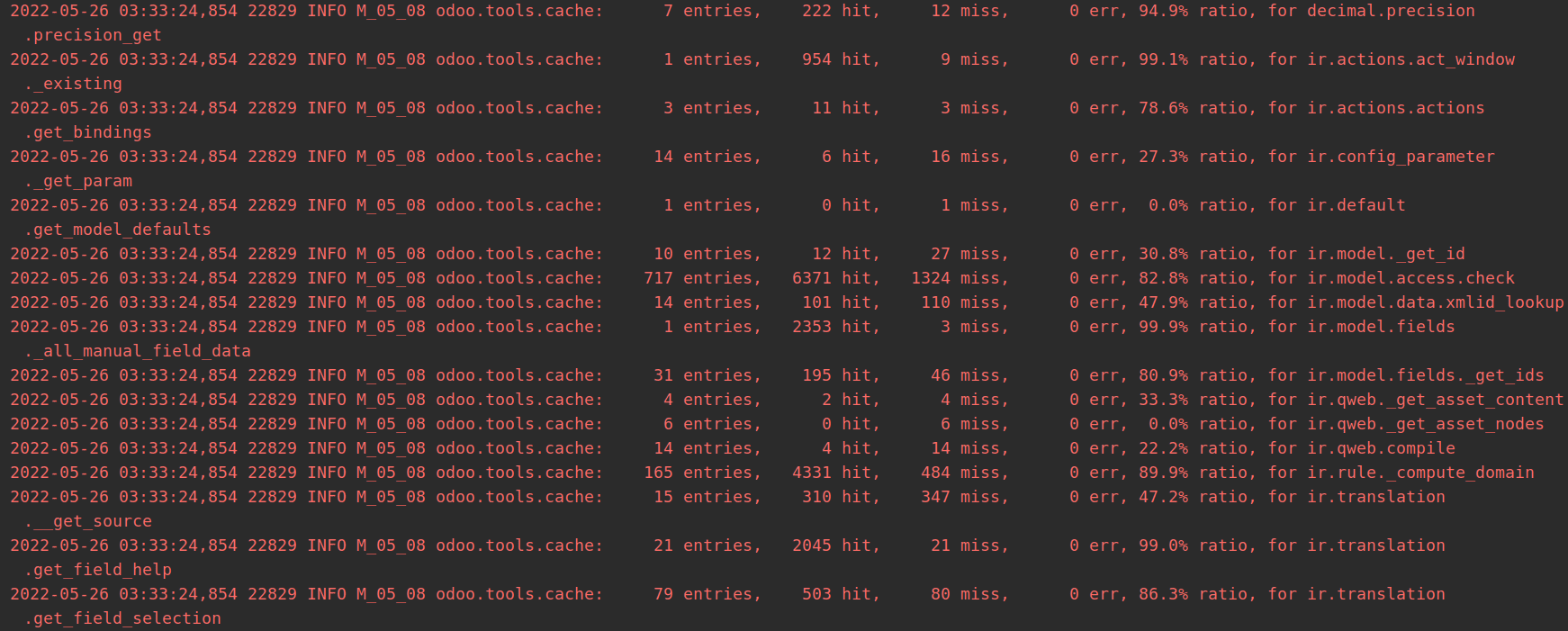

pass the SIGUSR1 signal to the Odoo process:

kill -SIGUSR1 24770

Here, 24770 is the process ID. After executing the command, you will see

the status of the ORM cache in the logs

The percentage shown in the cache status is the hit-to-miss ratio, that is, the success ratio of the result being found in the cache. If the cache's hit-to-miss ratio is too low, you should remove the ORM cache from the method.
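LRU eviction and the resulting ratio can be observed with Python's standard functools.lru_cache. This is an analogy rather than Odoo's cache; the square function is hypothetical, and the sketch assumes the ratio is computed as hits divided by total lookups.

```python
import functools


@functools.lru_cache(maxsize=2)  # tiny cache so eviction is easy to observe
def square(n):
    return n * n


# 1 and 2 are misses, 1 is a hit, 3 evicts 2, and 2 is then a miss again.
for n in (1, 2, 1, 3, 2):
    square(n)

info = square.cache_info()
hit_ratio = info.hits / (info.hits + info.misses)
```

With mostly distinct arguments, nearly every lookup is a miss, so the cache adds overhead without saving computation. This is the situation in which removing the cache is the better choice.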